15. Softmax

Multi-Class Classification and Softmax


The Softmax Function

In the next video, we'll learn about the softmax function, which generalizes the sigmoid activation function to problems with 3 or more classes.
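To make the link with the sigmoid concrete: with two classes whose logits are z and 0, softmax gives the first class e^z / (e^z + 1) = 1 / (1 + e^(-z)), which is exactly the sigmoid. Here is a minimal Python check of that identity. The helper names `sigmoid` and `softmax2` are hypothetical and are used only for illustration.

```python
import math

def sigmoid(z):
    # Logistic sigmoid: 1 / (1 + e^(-z))
    return 1.0 / (1.0 + math.exp(-z))

def softmax2(z1, z2):
    # Softmax over exactly two logits; returns the probability of the first class
    return math.exp(z1) / (math.exp(z1) + math.exp(z2))

for z in [-2.0, 0.0, 1.5]:
    # With the second logit fixed at 0, the two-class softmax matches the sigmoid
    print(z, sigmoid(z), softmax2(z, 0.0))
```

More generally, the two-class softmax depends only on the difference z1 - z2, so softmax2(z1, z2) equals sigmoid(z1 - z2).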


Softmax Quiz

SOLUTION:


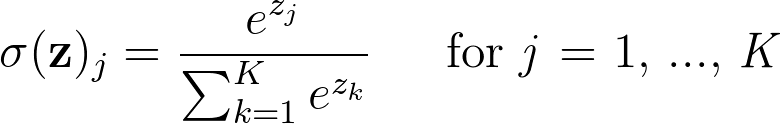

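The softmax function you saw in the above video can be expressed more concisely. For a set of K logits $z_1, \dots, z_K$, the probability assigned to class $i$ is:

$$\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$$

where the summation in the denominator adds together $e^{z_j}$ for every class $j$. Dividing each $e^{z_i}$ by this shared sum makes every output positive and makes the outputs sum to 1, so they can be read as probabilities.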
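Read term by term, the softmax formula exponentiates each logit, sums those exponentials to form the denominator, and divides each exponential by that sum. The following minimal pure-Python sketch carries out exactly those steps for a single list of logits. The name `softmax_1d` is hypothetical. This is not the vectorized NumPy version the quiz asks for.

```python
import math

def softmax_1d(z):
    # Numerator terms: e^(z_i) for every logit
    exps = [math.exp(z_i) for z_i in z]
    # Denominator: the sum of e^(z_j) over all K classes
    total = sum(exps)
    # Each output is one exponential divided by the shared sum
    return [e / total for e in exps]

probs = softmax_1d([1.0, 2.0, 3.0])
print(probs)       # approximately [0.0900, 0.2447, 0.6652]
print(sum(probs))  # 1.0, up to floating-point rounding
```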

Quiz

For the next quiz, you'll implement a softmax(z) function that takes in z, a one- or two-dimensional array of logits, where the logits are the outputs of your linear function Wx + b.

In the one-dimensional case, the array is just a single set of logits. In the two-dimensional case, each column in the array is a set of logits. The softmax(z) function should return a NumPy array of the same shape as z.

For example, given a one-dimensional array:

```python
# logits is a one-dimensional array with 3 elements
logits = [1.0, 2.0, 3.0]

# softmax will return a one-dimensional array with 3 elements
print(softmax(logits))
# [0.09003057 0.24472847 0.66524096]
```

Given a two-dimensional array where each column represents a set of logits:

```python
import numpy as np

# logits is a two-dimensional array; each column is one set of logits
logits = np.array([
    [1, 2, 3, 6],
    [2, 4, 5, 6],
    [3, 8, 7, 6]])

# softmax will return a two-dimensional array with the same shape
print(softmax(logits))
# [[0.09003057 0.00242826 0.01587624 0.33333333]
#  [0.24472847 0.01794253 0.11731043 0.33333333]
#  [0.66524096 0.97962921 0.86681333 0.33333333]]
```

Implement the softmax function, which is specified by the formula shown above.

The probabilities for each column must sum to 1. Feel free to test your function with the inputs above.
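One possible NumPy sketch of the quiz function follows. It assumes the column convention described above: each column of a 2-D input is one set of logits, so the sum runs over axis 0. It also subtracts the column-wise maximum before exponentiating, which is a common addition the quiz does not require. The shift leaves the result unchanged, because softmax is invariant to adding the same constant to every logit in a set, but it keeps `np.exp` from overflowing on large logits.

```python
import numpy as np

def softmax(z):
    """Compute softmax values for each set of logits in z.

    z is a 1-D array (one set of logits) or a 2-D array in which each
    column is a set of logits. Returns an array of the same shape.
    """
    z = np.asarray(z, dtype=float)
    # Shift each column by its maximum for numerical stability;
    # this does not change the value of the formula.
    shifted = z - np.max(z, axis=0, keepdims=True)
    exps = np.exp(shifted)
    # Divide by the column-wise sum so every column sums to 1
    return exps / np.sum(exps, axis=0, keepdims=True)

print(softmax([1.0, 2.0, 3.0]))
print(softmax(np.array([[1, 2, 3, 6],
                        [2, 4, 5, 6],
                        [3, 8, 7, 6]])))
```

Both calls should reproduce the example outputs given earlier in this section. Using `keepdims=True` lets the same code handle 1-D and 2-D inputs, since the reduced axis is kept with size 1 and broadcasts back against `z`.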
